Written by: prateek, roshan, siddhartha and linguine (Marlin), krane (Asula)

Thanks to jesse, jim, lamby, nick, ren, rajiv and yangwao for their thoughtful feedback and comments.

Trusted Execution Environments (TEEs) have become increasingly popular since Apple announced Private Cloud Compute and NVIDIA made Confidential Computing available in its GPUs. Their confidentiality guarantees help secure user data (which may include private keys), while their isolation properties ensure that the execution of programs deployed on them can’t be tampered with, whether by a human, another program or the operating system. It should thus be no surprise that they have also found product-market fit in the fast-evolving crypto x AI space.

As with any new technology, TEEs are arguably going through a period of unchecked optimism and experimentation. This piece hopes to serve as a practical guide for the discerning developer to understand what TEEs are, their security model, common pitfalls and best practices to use them safely. Although fairly technical and builder-oriented, we hope that the larger community can use this guide to ask developers the right questions and promote a safer ecosystem.

NB: To keep the text accessible, we knowingly replace well-known TEE terminology with simpler equivalents and take similar liberties when explaining domain-specific concepts.

Is there a reason all software shouldn’t just move to TEEs?

— Illia (root.near) (🇺🇦, ⋈) (@ilblackdragon) December 26, 2024

TEE enable private verifiable compute at low % overhead.

What are TEEs?

A TEE is an isolated environment in a processor or data center where programs can run without interference from the rest of the system. This is achieved through careful system design that enforces strict access control policies on how external programs can interact with the applications running inside it. TEEs are ubiquitous in mobile phones, servers, PCs and cloud environments, making them very accessible and affordable.

The above paragraph may sound vague and abstract, but it is intentionally so. Different server and cloud vendors implement TEEs differently.

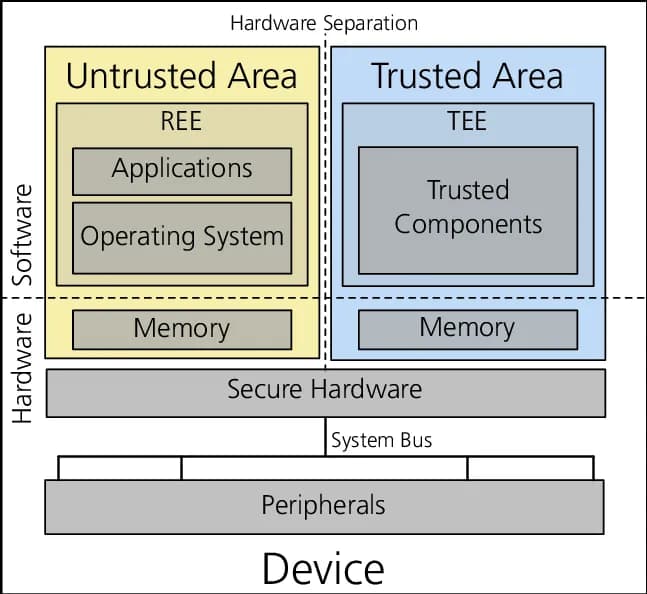

TEEs Illustrated. Source

Most readers are likely using devices that they logged into using biometric data. Ever wondered why no malicious app, website or jailbroken OS has been able to leak a database of such private data (stolen from the device, not a government/corporate server)? It is because in addition to the data being encrypted, the circuitry in your device simply wouldn’t allow programs to access the areas of memory and processor that process such data! Biometrics on phones and computers are stored on TEEs.

Hardware wallets such as Ledger are another example crypto users would be familiar with. The wallet connects to a machine for power and sandboxed communication, but stores the seed phrase itself.

In both the above cases, users trust the device manufacturer to correctly design chips and provide appropriate firmware updates to prevent confidential data from being exported or viewed.

The security model

Unfortunately, the large variety of TEE implementations means each one (Intel SGX, Intel TDX, AMD SEV, AWS Nitro Enclaves, ARM TrustZone) requires its own analysis. For the rest of this piece, we’ll largely talk about Intel SGX, TDX and AWS Nitro, as they are the most relevant to web3 given their popularity, the extent of available dev tooling and how easy they are to work with.

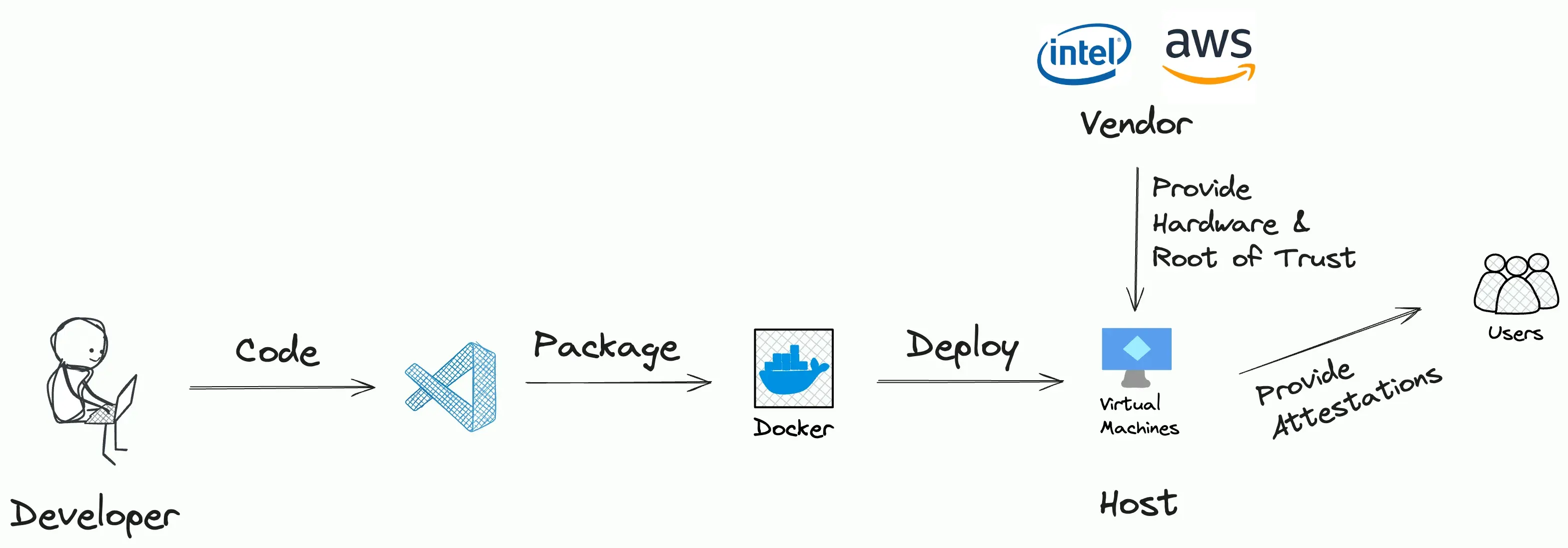

A typical workflow for an application deployed in a TEE is as follows:

- A ‘developer’ writes some code which they may or may not have open-sourced.

- They then package the code in an Enclave Image File (EIF) that can be run within a TEE (designed and manufactured by a ‘vendor’).

- The EIF is hosted on someone’s machine (the ‘host’). It is possible that the developers themselves also serve as the host.

- Users can then interact with the application through predefined interfaces.

App Deployment Workflow with TEEs.

Clearly, there are 3 potential points of trust:

- The developer: What does the code that was used to prepare the EIF even do? Does it just log all the data the user shares with the program and upload it to a remote server?

- The host: Is the person operating the machine running the same EIF as publicized and intended? Are they even running it in a TEE?

- The vendor: Is the design of the TEE even secure? Is there an intentional backdoor that leaks all data to the vendor?

Thankfully, eliminating the developer and the host as points of failure is exactly what well-designed TEE applications are intended to do. They do so using Reproducible Builds and Remote Attestations.

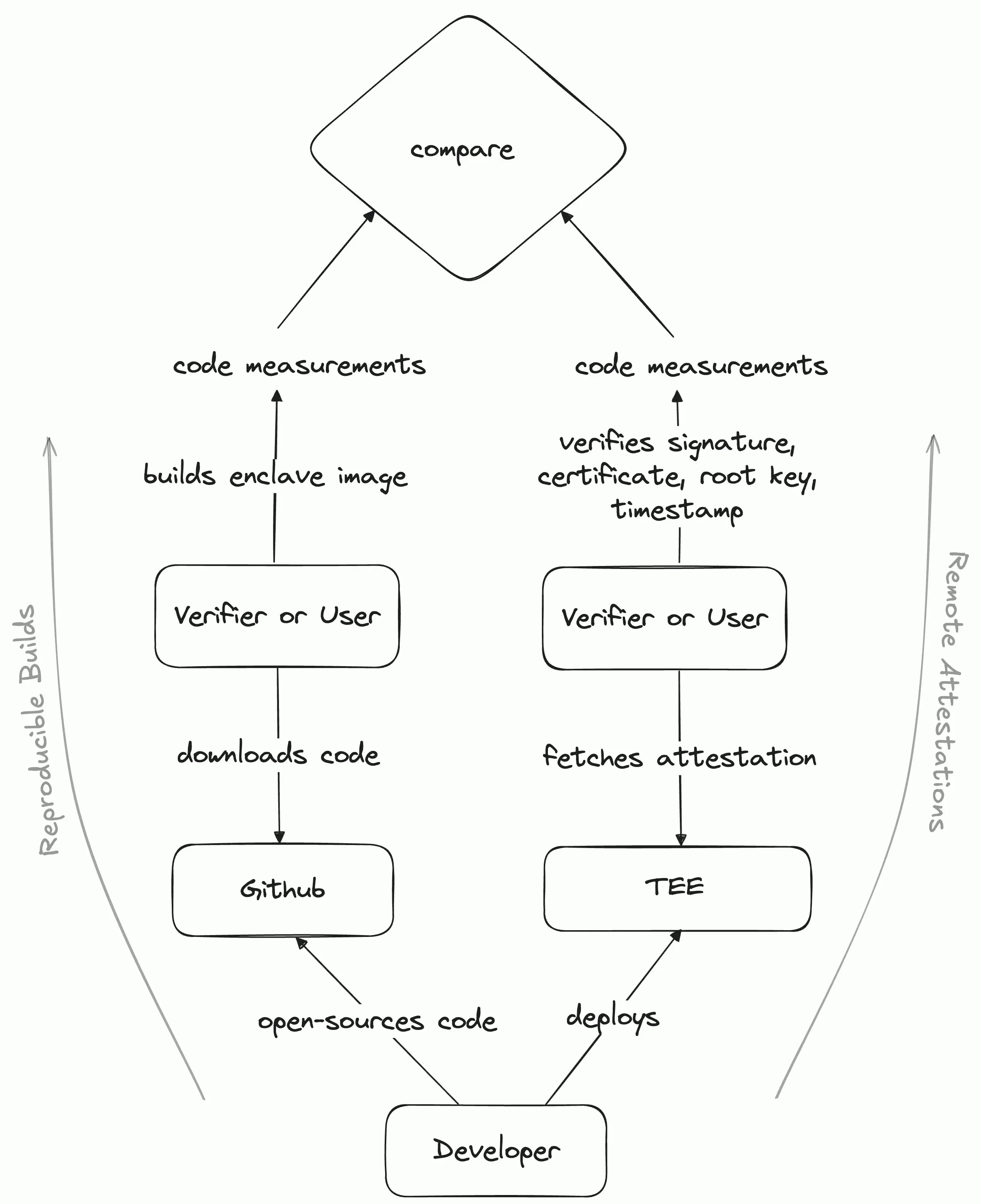

Reproducible Builds: A typical program today includes a number of packages. These packages change with time as new updates and patches are released. If the identifier of a program is the hash of its entire code, then any change in the version of a package used, or in the way the dependency tree is resolved before the measurement (code hashes or Platform Configuration Register values) is calculated, alters the measurement and thus the identifier of the program.

As the name suggests, the concept of reproducible builds involves following a set of standards such that a certain version of code when built using the standardized pipeline always returns the same code measurement.
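As a rough illustration of why pinning and canonical ordering matter, the sketch below computes a toy measurement over source bytes and a pinned dependency list. The function name `toy_measurement` and the package names are hypothetical, and real platforms measure the built image rather than a manifest; the only point is that the digest stays stable across listing order and changes with any version bump.

```python
import hashlib

def toy_measurement(source: bytes, deps: dict) -> str:
    """Hash the source together with a canonically ordered dependency list.

    Hypothetical stand-in for a real code measurement (e.g. PCR values).
    """
    h = hashlib.sha256()
    h.update(len(source).to_bytes(8, "big"))  # length prefix avoids ambiguity
    h.update(source)
    for name in sorted(deps):  # sorting removes dependence on listing order
        h.update(f"{name}=={deps[name]}\n".encode())
    return h.hexdigest()

source = b"print('hello enclave')"
pinned = {"libfoo": "1.2.0", "libbar": "0.9.1"}     # hypothetical packages
reordered = {"libbar": "0.9.1", "libfoo": "1.2.0"}  # same pins, other order
bumped = {"libfoo": "1.2.1", "libbar": "0.9.1"}     # one patch-level bump

# Same pins in a different order give the same digest; a bump does not.
print(toy_measurement(source, pinned) == toy_measurement(source, reordered))
print(toy_measurement(source, pinned) == toy_measurement(source, bumped))
```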

Nix is a popular tool to build reproducible executables. Once the source code is public, anyone can inspect or audit the code to ensure the developer has not inserted anything untoward and then build it to arrive at the same code measurements that the developer would have deployed in production. But how do we know the measurement of the binary that’s running in the TEE? Enter Remote Attestations!

If you're a TEE then show us your remote attestation

— Andrew Miller (@socrates1024) December 25, 2024

Remote Attestations: Remote Attestations are signed messages from the TEE platform (a trusted party) that contain measurements of the program, the TEE platform version, etc. They let an external observer know that a certain program (with the specified measurements) is being executed in a secure location (a real TEE with a secure platform version) that no one can access.

Attestation Verification Workflow with TEEs.

Together, Reproducible Builds and Remote Attestations let an external observer know the precise code that’s being run within a TEE along with the TEE platform version, preventing the developer or the host from lying about the code being run or where it is running.
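The checks an external observer performs can be sketched as follows. This is a minimal sketch under stated assumptions: the `Attestation` type and its fields are hypothetical, the platform version is simplified to one integer, and verification of the vendor's signature chain (which is platform-specific) is assumed to have happened elsewhere and is represented only by a boolean.

```python
import hmac
from dataclasses import dataclass

@dataclass(frozen=True)
class Attestation:
    """Hypothetical, simplified view of a parsed remote attestation."""
    measurement: str        # hex digest of the running image, per the platform
    platform_version: int   # simplified to a single integer for this sketch
    signature_valid: bool   # outcome of the vendor signature check (not shown)

def accept_attestation(att: Attestation, expected_measurement: str,
                       min_platform_version: int) -> bool:
    """Accept only a vendor-signed attestation for the expected code
    running on a sufficiently recent platform version."""
    if not att.signature_valid:
        return False
    if att.platform_version < min_platform_version:
        return False
    # Compare the attested measurement with the one rebuilt from source.
    return hmac.compare_digest(att.measurement, expected_measurement)
```

Here `expected_measurement` would come from rebuilding the public source with the reproducible pipeline, so the observer never has to take the developer's or the host's word for what is running.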

Let's clarify: the main threat of the Intel SGX Root Provisioning Key leak is not an access to local enclave data (requires a physical access, already mitigated by patches, applied to EOL platforms) but the ability to forge Intel SGX Remote Attestation

— Mark Ermolov (@_markel___) August 28, 2024

However, the vendor is the ultimate root of trust in the case of TEEs. If it wishes to be malicious (or creates vulnerable hardware), it can always sign attestations for programs running outside a TEE (which may or may not be the program the user thinks they are interacting with). Either by itself or in collusion with the developer or host, it can pass off the execution of altered code as validly attested. Threat models which consider the vendor a possible attack vector should thus avoid relying solely on TEEs and are better off coupling them with zero-knowledge proofs or consensus mechanisms.

The allure of TEEs

crypto x AI loves TEEs.

In our opinion, the properties that have made TEEs popular, especially for the deployment of AI agents, are:

- Metrics: TEEs can run LLMs with performance and cost close to those of a normal server. zkML is no match.

- GPU support: NVIDIA provides confidential computing support in its latest line of GPUs (Hopper, Blackwell, etc.).

- Correctness: LLMs are non-deterministic; multiple runs of the same query give different results. Consequently, multiple nodes (including an always-online watcher trying to create a fraud proof) may never come to consensus on the correct result. On the other hand, code being isolated in a TEE means that the host can’t manipulate its execution, which means programs run as written. This makes TEEs far more suitable than opML or consensus for inference.

- Confidentiality: Data inside a TEE isn’t visible to external programs. As a result, private keys generated or received inside a TEE remain secure (encumbered). This property can be used to give users the assurance that any message signed by a key originated inside the TEE or was vetted by the constraints set by the code running in the TEE.

This property can be further used to encrypt persistent state which can be stored on IPFS/Filecoin or to encumber API keys or Twitter passwords as was demonstrated by tee_hee_he.

Imo people who pitch ZK against TEEs reveal themselves to understand neither. These technologies enable completely different things. TEEs is the only option for private computation over shared state, such as blockspace auctions.

— Hasu⚡️🤖 (@hasufl) October 19, 2024

- Networking: With the right tooling, programs running in a TEE can securely access the internet (without revealing the queries or responses to the host while still giving 3rd parties the assurance of correctly retrieved data). This is useful to retrieve information from 3rd party APIs but also to outsource computations to trusted but proprietary model providers.

- Write access: As opposed to zk solutions, code running in a TEE can construct messages (whether tweets or transactions) and use networking access to send them to APIs or RPCs.

- Easy development: The right frameworks and SDKs allow code written in any language to be deployed in a TEE as easily as a cloud server.

There are no "clever" solutions. TEEs are the only available technology to build decentralized, fast, and private systems today.

— @bertcmiller ⚡️🤖 (@bertcmiller) October 12, 2024

For better or worse, there simply exists no alternative to TEEs that satisfies the above requirements of a web3 AI developer. Similar to how it is believed that higher throughput enables new use cases for blockchains, TEEs expand the surface area of what onchain applications can do, potentially unlocking new use cases.

TEEs aren’t a magic bullet that can fix broken system design

Programs running in a TEE are still vulnerable to a range of attacks and bugs, much like smart contracts. For simplicity, we categorize the possible weaknesses as follows:

- Developer negligence

- Runtime vulnerabilities

- Architectural flaws

- Operational oversight

Developer negligence

Whether intentional or unintentional, developers can weaken the security guarantees of programs running in TEEs through their choices, actions and/or omissions. These include:

- Opaque code: The security model of a TEE relies on verifiable behaviour. Transparency of code is essential to ensure that the behaviour can be reasoned about by a third party outside the TEE. It lets users verify the invariants that the TEE is expected to uphold throughout its lifetime.

- Poor verification of measurements: Continuing from the point above, even if the code is public, the system is broken if no third party rebuilds the code, computes the measurements (Platform Configuration Register (PCR) values or binary hashes) and checks them against the measurements in remote attestations. It’s akin to receiving a zk proof but not verifying it.

Coprocessors can automate the verification process through a smart contract. But if the canonical measurements to be verified against in the contract, or the contract itself can be switched and upgraded, the security of the system can be compromised.

- Insecure code: Even if you are careful to generate and manage secrets correctly within the TEE, the code might expose them through endpoints or make requests that reveal them to the outside world. In addition, the code might contain vulnerabilities that allow attackers to bypass behavioural protections placed on the TEE.

Reasoning about the behaviour of a TEE is only as good as the reasoning abilities of the tools being used. In a world where almost nothing is formally verified, bugs are an inevitable part of software development. This demands standards in the software development and assurance process that are closer to those of smart contract development than of traditional backend development.

- Supply chain attacks: Modern software development uses a lot of third party code in the form of libraries pulled from repositories on the internet. Supply chain attacks in the form of insecure or poisoned libraries pose a major threat to the integrity of the TEE.

Runtime vulnerabilities

Even a careful developer with a robust codebase may fall prey to attacks that can be executed at runtime. Developers have to think through whether any of the following can affect the security guarantees of their program.

- Dynamic code: It might not always be possible to keep all code transparent. Sometimes the use case inherently requires executing opaque code loaded into the TEE dynamically at runtime. Such code can easily leak secrets or break invariants, and utmost care has to be taken to protect against this.

As an example, Oyster Serverless allows users to load and execute functions in multi-tenanted always-on instances. Security in such a system effectively comes from sandboxing, which is discussed later.

- Dynamic data: Most applications use external APIs and other data sources during the course of execution. The security model expands to include these data sources, which are now on the same footing as oracles in DeFi, where incorrect or even stale data can lead to disaster.

E.g. In the case of AI agents, there’s an excessive reliance on LLM services like Claude.

- Insecure and flaky communication: TEEs run on a host that has the ability to control everything going in and out of the TEE. In security terms, the host is effectively a perfect Man-in-the-Middle (MitM) between the TEE and external interactions. It not only has the ability to peek into connections and see what is being sent, it can also censor specific IPs, throttle connections and inject packets into connections to trick one side into thinking they came from the other side.

E.g. A matching engine running inside a TEE that processes encrypted transactions can’t provide fair ordering guarantees, as the router/gateway/host can still drop, delay or prioritize packets based on the IP address they originate from.

- Grinding attacks: A developer may expose endpoints in the application to prompt the model. They could then keep dropping response packets until the model responds in a way favourable to them. Even when such an endpoint isn’t exposed, if appropriate precautions aren’t taken, the developer could run the model locally to map what sort of input leads to what kind of output.

E.g. A Twitter agent deployer could keep prompting the bot until it says a certain phrase or, worse, sets the parameters of a DeFi protocol in a malicious way.

Architectural flaws

Zooming out, even before starting to write any code, developers should think through the stack they plan to use to build the enclave application. Decisions are much easier to make when priorities are clear from the start!

- Large surface area: Surface area refers to the amount of code that needs to be perfectly secure to avoid compromising its execution. Code with a large surface area is very hard to audit and might hide bugs or exploitable vulnerabilities. This is also usually in tension with developer experience since good developer experience usually means adding layers of abstractions, but these very abstractions can compromise the program.

For example, enclaves that rely on Docker have a much larger surface area compared to enclaves that don’t. Enclaves that rely on a full-fledged operating system have a much larger surface area compared to enclaves that use a minimal operating system, which in turn have a larger surface area compared to something like a unikernel. Better yet are the ones that do not require an operating system at all.

- Portability and liveness: In Web3, it is important for applications to be censorship-resistant. It should be possible for anybody to spin up TEEs and take over from an inactive operator, making the application portable. The biggest challenge here is the portability of secrets. Some TEEs include key derivation mechanisms, but these are usually limited to the same machine, which is not enough to maintain portability.

- Insecure secret management: TEEs usually hold secrets, if only to authenticate themselves and their interactions with the outside world. For example, a popular use case with the rise of AI agents is TEE-based agents that have their own encumbered wallet that is not known to a third party.

On its face, it seems simple to just generate secrets inside the TEE, but this comes with the downside of the secrets being lost if the TEE stops running. For production applications, it is essential to have continuity in secrets so new TEEs can take over from old ones. However, if done insecurely, it breaks security assumptions and can leak secrets.

- Insecure root of trust: Right on the heels of insecure secret management, there’s also the issue of how their public counterparts are verified. For example, with an AI agent running inside a TEE, how do you verify that a given address belongs to the agent? If not careful, the real root of trust might end up being an external third party or Key Management Service that is able to expose secrets instead of the TEE itself.

Operational oversight

Last but not least, there are some practical considerations on how the TEE is operated by the host:

- Insecure platform versions: TEE platforms receive occasional security updates that are reflected as platform versions in remote attestations. If your TEE is not running on a secure platform version (and the rest of your system doesn’t enforce it), known attack vectors can be leveraged to extract secrets from the TEE. To make it worse, your TEE might run on a secure platform version today, but that version might become insecure tomorrow.

- No physical security: Despite your best efforts, TEEs might be vulnerable to side channels and other esoteric attacks, usually requiring physical access and control of the TEE. Therefore, physical security is an important defense-in-depth layer to ensure such attacks are not trivially possible, and to provide ample time to deploy mitigations against zero-days.

A related concept is Proof-of-Cloud where you get a proof that the TEE is running in the datacenter of a cloud that has physical security in the form of guys with guns at the door.

I talk about these points some times, under the name "proof of cloud" and @hdevalence "guy with a glock" threat model. I like the idea you could give a ZK proof that one from a list of reputable clouds is hosting your TEE on joining the network

— Andrew Miller (@socrates1024) July 24, 2024

Until now, we’ve primarily described features that make TEEs exciting, especially for crypto x AI. We’ve also laid out a laundry list of security issues that can arise with TEE deployments due to a multitude of factors. In the next section, we’ll describe different levels of security developers can impart to their TEEs when deploying them.

Building enclaves securely

We divide our recommendations as follows:

- Most secure scenario

- Necessary precautions to take

- Use-case dependent suggestions

Most secure scenario: No external dependencies

The most secure applications eliminate external dependencies such as external inputs, APIs or services, which reduces the attack surface. The application then operates in a self-contained manner, with no external interactions that could compromise its integrity or security. While this strategy may limit functionality, it offers high security in scenarios where trust and confidentiality are paramount.

This level of security is achievable for several crypto x AI use cases if the models are run locally.

Necessary precautions to take

Irrespective of whether or not the application has external dependencies, the following are a must!

- Treat TEE apps as smart contracts rather than backend applications; update less often, test rigorously

Code to run within secure enclaves should be treated with the same rigor applied to writing, testing, and updating smart contracts. Like smart contracts, TEEs operate in a highly sensitive and non-tamperable environment where bugs or unexpected behavior can lead to severe consequences, including the complete loss of funds. Thorough audits, extensive testing, and minimal, carefully reviewed updates are essential to ensure the integrity and reliability of TEE-based applications.

The role of verifiable logs and verifiable states 👉 Transforming "something" that is difficult to verify into "verifiable" through the organization of data structures similar to smart contracts. pic.twitter.com/e1yYYnj7Od

— CP | evm++/acc (@CP2426_) December 26, 2024

- Code should be audited and the build pipeline checked

Building an enclave image is a critical step in running a secure application within a Trusted Execution Environment (TEE). The security of the application hinges not just on the code itself but also on the tools and processes used during the build. A secure build pipeline is essential to prevent vulnerabilities from being introduced, since TEEs only guarantee that the provided code will run as is; they cannot account for flaws introduced during the build process.

To mitigate risks, code must be rigorously tested and audited to eliminate bugs and prevent unnecessary information leaks. Additionally, reproducible builds play a crucial role, particularly when code is developed by one party and used by another. They allow anyone to verify that the enclave image matches the original source code, ensuring transparency and trust. Without reproducible builds, determining the exact contents of an enclave image, especially one already in use, becomes nearly impossible, jeopardizing the entire security model of the application.

For example, the source code for DeepWorm (a project that runs a simulation model of a worm’s brain in a TEE) is fully open. The enclave image is built reproducibly using a Nix pipeline ensuring that anybody can build the Worm locally and verify the PCRs provided with the releases. In addition, they can ask the worm enclave running in a TEE for attestations and verify they match expected PCRs. Oyster itself provides Nix builds for all enclaves and enclave components.

- Use audited or verified libraries

When handling sensitive data in enclaves, only use audited or thoroughly vetted libraries for key management and private data processing. Unaudited libraries risk exposing secrets and compromising your application’s security. Prioritize well-reviewed, security-focused dependencies to maintain data confidentiality and integrity.

- Always verify attestations from enclaves

Users interacting with an enclave must validate attestation reports or verification mechanisms derived from them to ensure secure and trustworthy interactions. Without these checks, a malicious host could manipulate responses, making it impossible to differentiate genuine enclave outputs from tampered data. Attestation provides critical proof of the codebase and configuration running within the enclave, with PCRs serving as unique identifiers for the enclave’s codebase.

Attestations can be verified onchain (Intel SGX, AWS Nitro), offchain with ZK proofs (Intel SGX, AWS Nitro), by users themselves or by a hosted service (as on t16z or Marlin Hub).

The bar for a TEE App should be remote attestation verified in smart contract and a reasonable number of experts LGTM'ing that verifier.

— Georgios Konstantopoulos (@gakonst) October 23, 2024

Same for ZK.

RA measurement ideally includes binary and eventually cloud vendor.

IMO everyone should work towards that.

In scenarios where input parameters can alter the enclave’s functionality, verifying these parameters becomes crucial. By validating attestations and relevant configuration flags, users can ensure trust, security, and integrity in their interactions with the enclave.

E.g. The Worm contract allows enclaves to post updates only after onchain attestation verification with the required immutable measurements (the PCRs).

- Check whether initialization parameters (if any) match expected params

Enclaves running the same image can exhibit vastly different behaviors or security guarantees depending on their initialization parameters. These parameters, depending on the codebase, can play a crucial role in defining the enclave’s operational and security characteristics.

For instance, an enclave with a debug mode enabled might allow certain endpoints to extract sensitive information, drastically altering its security posture compared to one without the debug flag. To mitigate such risks, users must validate initialization parameters (if any) against predefined and audited configurations to ensure the enclave operates as intended.
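One way to make this check mechanical is to compare a digest of the canonicalized parameters against a set of audited digests. The sketch below assumes the parameters are available to the verifier as a JSON-like dictionary; the parameter names (`debug`, `network`) and the helper names are hypothetical and not taken from any specific framework.

```python
import hashlib
import json

def params_digest(params: dict) -> str:
    """Digest of the parameters in a canonical form (sorted keys, no spaces)."""
    canonical = json.dumps(params, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

# Hypothetical audited configuration; a real deployment would publish this
# digest alongside the expected measurements.
AUDITED_DIGESTS = {params_digest({"debug": False, "network": "mainnet"})}

def params_are_audited(params: dict) -> bool:
    """True only if the enclave was initialized with an audited configuration."""
    return params_digest(params) in AUDITED_DIGESTS
```

With this in place, `params_are_audited({"network": "mainnet", "debug": False})` passes regardless of key order, while the same image started with `"debug": True` is rejected.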

Use-case dependent suggestions

Based on the use case the application targets and how it is structured, the following tips could be useful in making your application safer.

- Governance to update code running in an enclave

Just as smart contract updates require careful security practices, maintaining expected measurements in TEE-based applications demands equal attention. Measurements are critical to defining the integrity of the enclave’s codebase and configuration, and any changes to them directly affect the application’s security. Updates to measurements should be managed through structured processes, like governance frameworks or multisig schemes, tailored to the application’s security model. This ensures changes are deliberate, well-audited, and aligned with the system’s trust assumptions, safeguarding the integrity of the enclave and preventing unauthorized modifications.
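A multisig-style process for updating expected measurements can be sketched offchain as below. This is an illustrative model only: the class `MeasurementRegistry`, the signer names and the threshold are hypothetical, signer authentication is reduced to a name check, and a production system would typically enforce the same rule in a smart contract.

```python
class MeasurementRegistry:
    """Toy M-of-N registry of trusted enclave measurements (hypothetical)."""

    def __init__(self, signers, threshold):
        signers = frozenset(signers)
        if not 1 <= threshold <= len(signers):
            raise ValueError("threshold must be between 1 and the signer count")
        self._signers = signers
        self._threshold = threshold
        self._approvals = {}  # measurement -> set of signers who approved it

    def approve(self, signer, measurement):
        """Record one signer's approval; repeat approvals are not double counted."""
        if signer not in self._signers:
            raise PermissionError(f"{signer!r} is not an authorized signer")
        self._approvals.setdefault(measurement, set()).add(signer)

    def is_trusted(self, measurement):
        """A measurement is trusted once enough distinct signers approved it."""
        return len(self._approvals.get(measurement, ())) >= self._threshold
```

A verifier would then accept an attestation only if its measurement satisfies `is_trusted`, so no single key holder can swap in a new enclave image.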

- Ensure interactions with the enclave are performed through secure channels which terminate inside the enclave

Enclave hosts are inherently untrusted, meaning they can intercept and modify communications. Depending on the use case, it might be acceptable for the host to read the data but not alter it, while in other cases, even reading the data may be undesirable. To mitigate these risks, establishing secure, end-to-end encrypted channels between the user and the enclave is crucial. At a minimum, ensure that messages include signatures to verify their authenticity and origin. Additionally, always verify that you are communicating with the correct enclave by checking its measurements and the authenticity of the key used for signing or encrypting data. This ensures the integrity and confidentiality of the communication.

E.g. Oyster has the ability to support secure TLS issuance through the use of CAA records and RFC 8657. In addition, it provides an enclave-native TLS protocol called Scallop which does not rely on Web PKI in case you need fewer trust assumptions.
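To illustrate what checking the authenticity of the enclave's key can look like, the sketch below assumes that the attestation carries a SHA-256 digest of the enclave's public key in a user-data field. That binding convention, the function name and the parameter names are assumptions made for illustration; field names and encodings differ across platforms, and the attestation itself must already have been verified.

```python
import hashlib
import hmac

def key_is_bound(attested_user_data: bytes, public_key: bytes) -> bool:
    """Check that the key offered on the channel is the one the enclave
    committed to inside its (already verified) attestation."""
    expected = hashlib.sha256(public_key).digest()
    return hmac.compare_digest(attested_user_data, expected)
```

Only after this check passes should signatures or encrypted sessions under `public_key` be treated as terminating inside the attested enclave rather than at the host.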

- Design the application to be resistant against grinding attacks

In cases where having the ability to prompt the model is necessary, an untamperable counter can be maintained by applications running in an enclave to ensure that responses aren’t selectively dropped. Every response should be accompanied by the corresponding prompt and counter value.
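A minimal sketch of the counter idea follows. The class and function names are hypothetical, the model is any callable from prompt to response, and in a real deployment each record would also be signed with a key held inside the enclave (omitted here) so that the host cannot forge or renumber records.

```python
class CountedResponder:
    """Wraps a model so that every response carries a strictly increasing
    counter together with the prompt it answers."""

    def __init__(self, model):
        self._model = model   # any callable: prompt -> response
        self._counter = 0     # lives only inside the enclave

    def respond(self, prompt):
        self._counter += 1
        return {
            "counter": self._counter,
            "prompt": prompt,
            "response": self._model(prompt),
        }

def missing_counters(records):
    """Return counter values absent from a published set of records."""
    seen = {r["counter"] for r in records}
    if not seen:
        return []
    return [c for c in range(1, max(seen) + 1) if c not in seen]
```

Any gap reported by `missing_counters` indicates a response that was generated but not published. Responses dropped at the very end of the sequence are only detectable once a later record appears.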

Additional precautions as suggested in the tweet thread below may be necessary based on the nature of the application:

perturb the model/prompt with something generated privately in the TEE, now even the dev can't fully grind against it

— Andrew Miller (@socrates1024) December 30, 2024 -

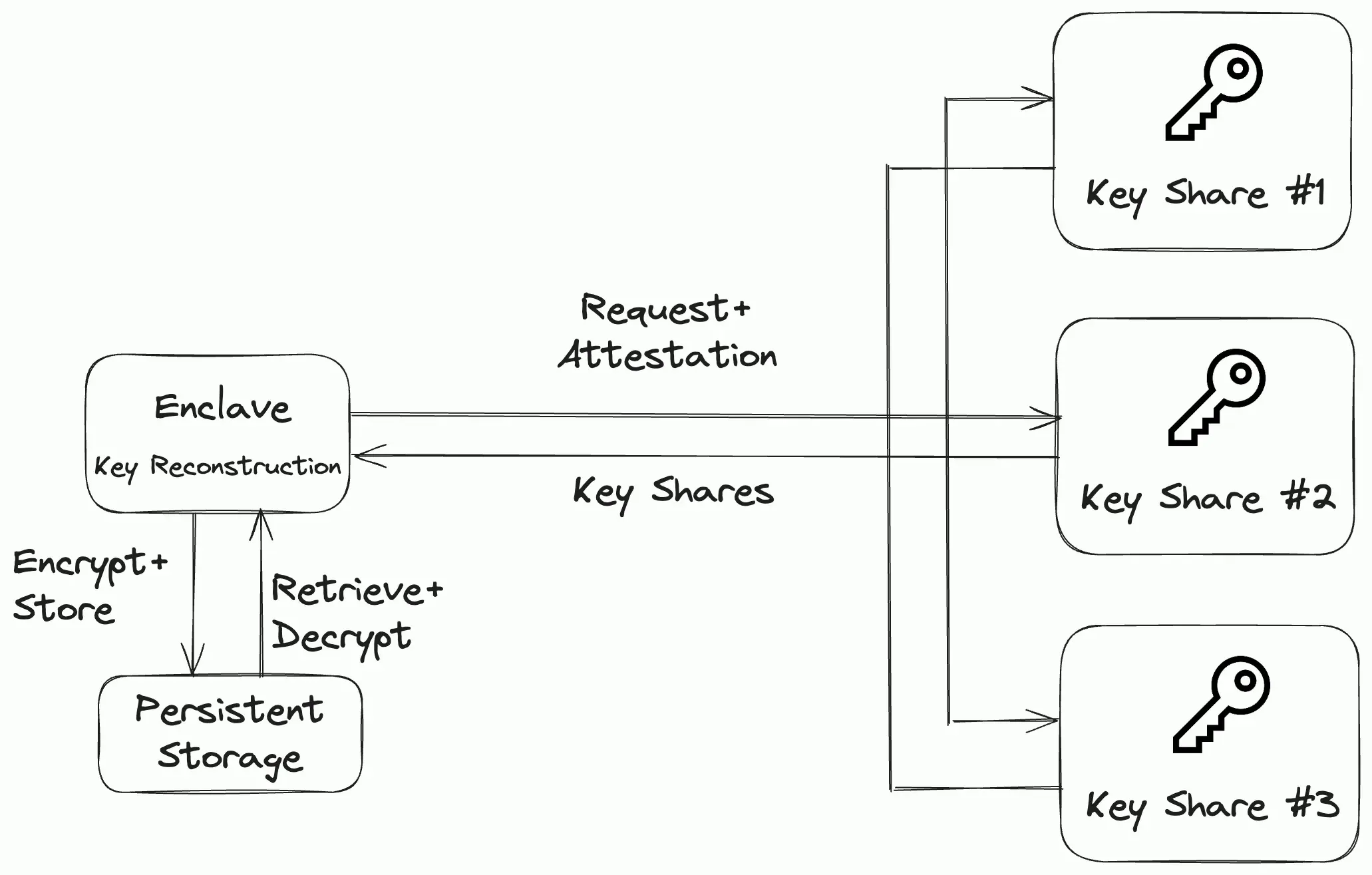

Know that enclave memory is transient

Enclave memory is transient, meaning its contents, including cryptographic keys, are lost when the enclave shuts down. Without a secure mechanism to persist this information, critical data could become permanently inaccessible, potentially leaving funds or operations in limbo.

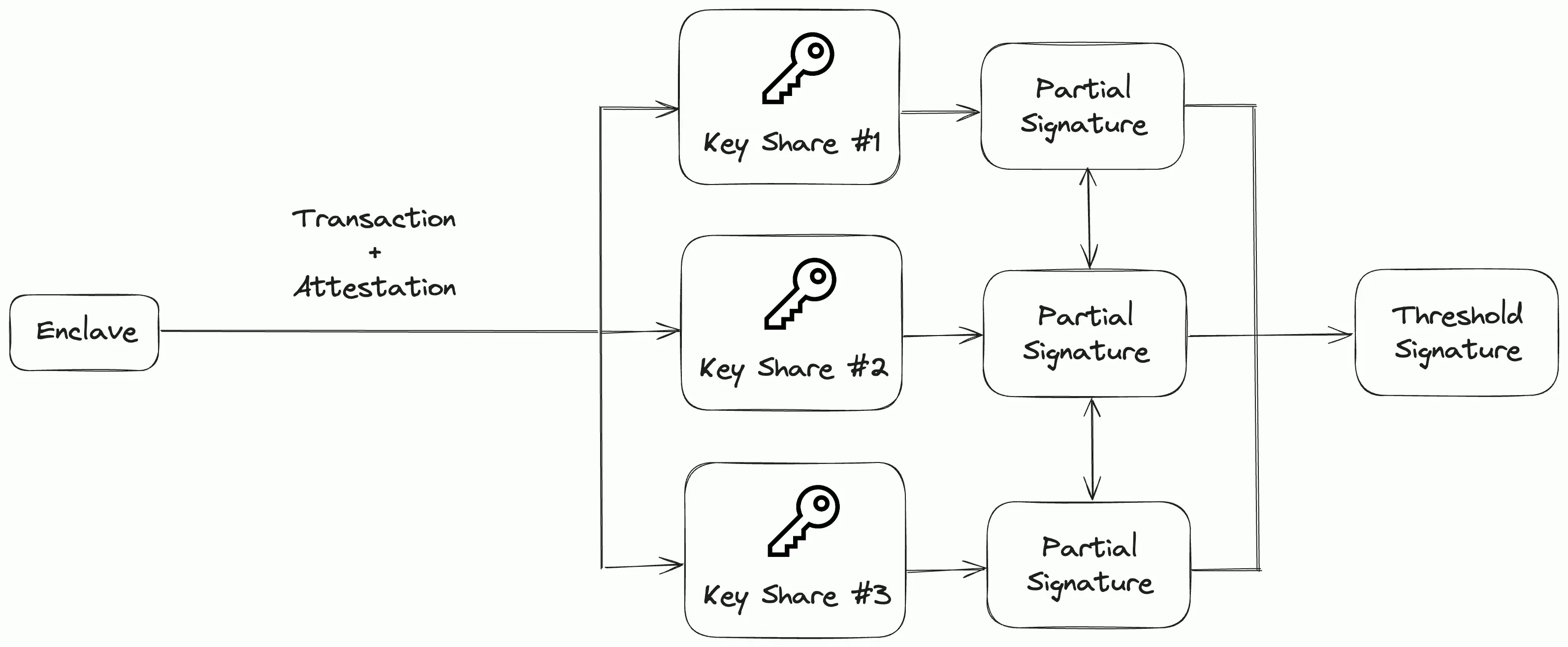

Multi-Party Computation (MPC) networks with decentralized storage systems like IPFS can be used as a practical solution for this issue. MPC networks split secrets across multiple nodes, ensuring no single node holds the full key while allowing the network to reconstruct it when needed. Data encrypted with this key can be securely stored on IPFS. When required, the MPC network can provide the key to a new enclave running the same image, provided specific conditions are met. This approach ensures resilience and robust security, maintaining data accessibility and confidentiality even in untrusted environments.

MPC-based reconstruction of keys.
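The idea that no single node holds the full key can be illustrated with plain n-of-n XOR secret sharing, shown below. This is a deliberately simplified stand-in and not an MPC protocol: every share is needed for reconstruction, whereas real networks would typically use threshold schemes and release shares only to an enclave that presents a valid attestation. The function names are hypothetical.

```python
import secrets

def split_secret(secret: bytes, n: int) -> list:
    """Split a secret into n shares; all n are required to recover it."""
    if n < 2:
        raise ValueError("need at least two shares")
    shares = [secrets.token_bytes(len(secret)) for _ in range(n - 1)]
    last = secret
    for share in shares:
        last = bytes(a ^ b for a, b in zip(last, share))
    return shares + [last]  # XOR of all shares equals the secret

def reconstruct(shares: list) -> bytes:
    """XOR all shares together to recover the secret."""
    if not shares:
        raise ValueError("no shares provided")
    out = bytes(len(shares[0]))
    for share in shares:
        out = bytes(a ^ b for a, b in zip(out, share))
    return out
```

Each individual share is indistinguishable from random bytes, so a node holding one share learns nothing about the key that encrypts the state stored on IPFS.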

An alternative approach when reconstruction of keys isn’t enabled by the MPC protocol is to have the MPC network sign on to blockchain transactions to unlock funds or perform a certain action. This approach is much less flexible and can’t be used to save API keys, passwords or arbitrary data (without a trusted third party storage service).

MPC-based transaction signing.

- Sandboxing

If you are working with frameworks running within a TEE that allow user code to execute alongside the existing framework, it is essential to sandbox user code (like the EVM!). Using sandboxing environments like Docker, V8, or similar tools ensures that user code cannot maliciously alter the framework’s behavior or compromise its security guarantees.

For example, Marlin’s serverless framework uses the V8 engine to isolate each serverless function, preventing malicious functions from interfering with the framework. This ensures that serverless functions cannot access keys used by the framework to attest that computations were executed correctly. By maintaining this separation, the framework preserves its integrity and security guarantees while allowing flexible execution of user-defined code.

- Reduce attack surface area

For security-critical use cases, it is worth trying to reduce the surface area as much as possible at the cost of developer experience. For example, Dstack ships with a minimal Yocto-based kernel which only contains modules that are necessary for Dstack to work. It might even be worth using an older technology like SGX (over TDX) which doesn’t require a boot loader or operating system to be part of the TEE.

- Physical Isolation

The security of TEEs can be further enhanced by physically isolating them from possible human intervention. While data centers and cloud providers are an obvious location to guard machines from being easily accessed, projects like Spacecoin are exploring a rather interesting alternative: space. The SpaceTEE paper relies on security measures such as measuring the moment of inertia after launch to verify whether the satellite was tampered with during its transit to orbit.

- Trustless TEEs

In contrast to the ‘guy with a glock’ model to operate TEEs described above, the open TEE effort led by Flashbots aims to make the TEE production supply chain auditable. It prevents backdoors from being sneaked in by the manufacturer, but possibly makes it easier for attackers to extract secrets from the TEE due to the lack of any security through obscurity (a weakness that, ironically, can be offset through physical isolation).

-

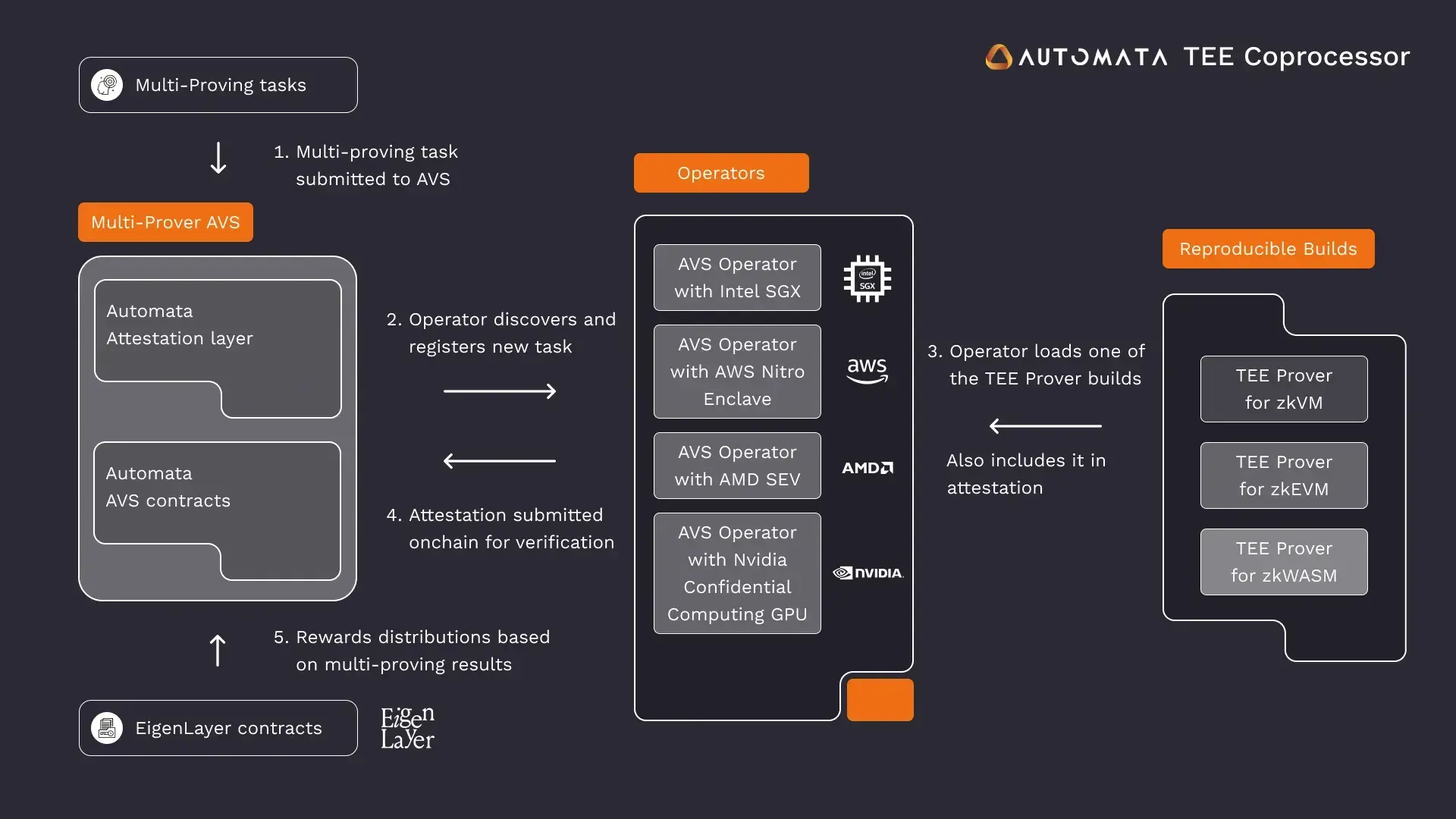

Multiprovers

Just as Ethereum relies on multiple client implementations to reduce the risk of bugs affecting the entire network, multiprovers uses different TEE implementations to boost security and resilience. By running the same computations across multiple TEE platforms, multiproving ensures that a vulnerability in one implementation doesn’t compromise the entire application. While this approach requires computations to be deterministic or a definition of consensus among implementations in non-deterministic cases, it offers significant advantages like fault isolation, redundancy, and cross-verification, making it a good choice for applications where reliability and trust are non-negotiable.

Automata’s Multiprover. Source
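For deterministic computations, the cross-verification step can be sketched as a simple quorum over results reported by different TEE platforms. The function name, the platform labels and the quorum rule below are assumptions for illustration and do not describe any particular multiprover implementation; each result is assumed to come with an attestation that has already been verified.

```python
from collections import Counter
from typing import Optional

def multiprover_result(results: dict, quorum: int) -> Optional[bytes]:
    """Return the output agreed on by at least `quorum` distinct platforms,
    or None when no output reaches the quorum.

    `results` maps a platform label to the output it reported.
    """
    if not results:
        return None
    value, votes = Counter(results.values()).most_common(1)[0]
    return value if votes >= quorum else None
```

For example, with results from three platforms where one disagrees, a quorum of two still yields the majority output, while a quorum of three returns `None` and flags the disagreement for investigation.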

Missing pieces

There exists an inherent tension between the agile development process developers expect when using TEEs and the security measures that are required to build safe applications using them.

While this post tries to lay out the most common issues and precautions when working with TEEs, there still remain several open questions the developer community could think about:

- In order to ease onboarding, the currently available SDKs by Flashbots, Phala and Marlin have gone the route of allowing code wrapped in Docker containers to be deployed in TEEs. However, this increases the Trusted Computing Base (TCB) and weakens the security guarantees of TEE-based applications.

- Are there ways to create sandboxed environments such that users can be assured that certain ‘bad’ actions cannot be taken without having to review the code? This would allow proprietary code to remain closed source and yet provide certain safety guarantees users expect from applications running in a TEE.

6) Open Source <> Private Source Code inside of a TEE is a large new area of exploration! In the above example we designed the matching engine to be closed source to protect alpha but we could still run a naive solver before submitting to ensure baseline invariants!

— dmarz ⚡️🤖 (@DistributedMarz) June 25, 2024

- What does the ideal update cycle for TEE-based applications look like? Although we provide several qualitative pointers through the post, setting expectations explicitly could be helpful to the community at large.

- What sort of tooling should be built to make continuous integration possible? Is it possible to have governance approve a canonical code version identified by a GitHub commit instead of by measurements? Decentralized frontends could greatly benefit if such a streamlined process were established.

- What do open-sourced TEEs look like, to what degree can the supply chain be audited, and can they compete in functionality with the likes of Intel and AWS?

- Finally, perhaps a lawyer can opine on whether a developer is indemnified from the actions of an AI agent they code up and deploy.

Looking ahead

Enclaves and TEEs have clearly become an extremely exciting area of computing. As previously mentioned, the ubiquity of AI and its constant access to users’ sensitive data has meant that large tech companies like Apple and NVIDIA are using enclaves in their products and offering them as part of their product lines.

On the other hand, the crypto community has always been very security conscious. As developers try to expand the suite of onchain applications and use cases, we’ve seen TEEs become popular as a solution that offers the right trade-off between features and trust assumptions. While TEEs are not as trust-minimized as full ZK solutions, we expect they will be the avenue through which offerings from crypto companies and big tech slowly meld for the first time.